Innovative image processing methods are used to collect accessibility features and add them to automatically digitized building maps. The types of features to be collected, their required quality, and their use will be determined together with the people affected and evaluated in pilot studies with the target groups.

The generated maps are made available in a barrier-free web application. The information on the maps should be individually adaptable to the current situation and the needs of the target group. Users can thus research all relevant route information independently, plan routes, or create a barrier-free representation of the building maps for visual, tactile, or spoken use at home or while travelling.

Long description

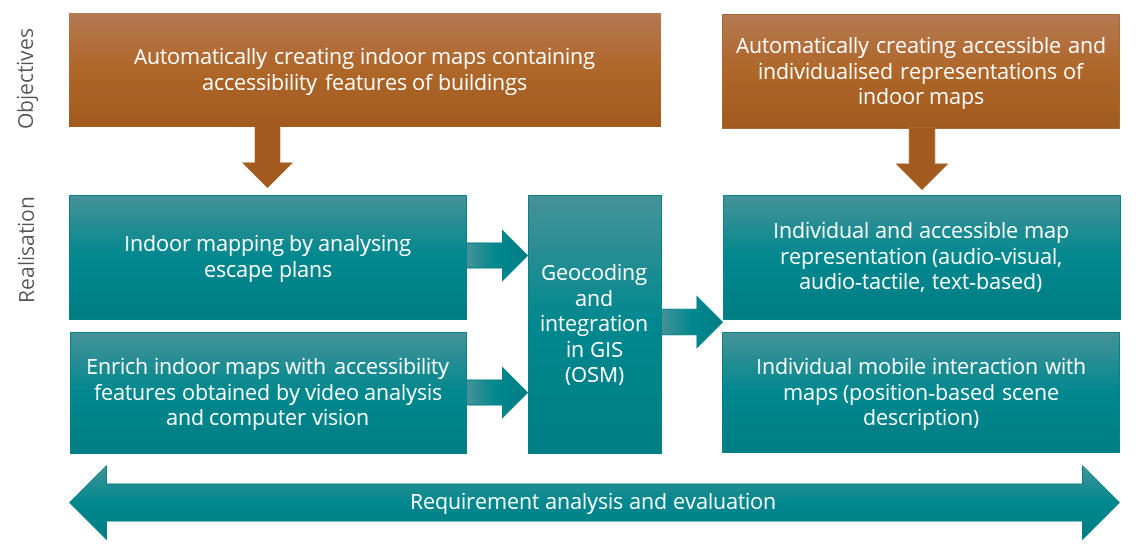

The diagram shows the schematic structure of the project plan of the research project ‘AccessibleMaps’. Project goals (orange) and realization steps (turquoise) are shown as colored rectangles, with the realization steps arranged horizontally below the goals. Arrows lead from the goals to the realization steps they require. Below the realization phase, an arrow labelled ‘Requirements analysis and evaluation’ runs across the entire width of the graphic.

Two goals are defined in horizontally arranged rectangles: 1. automatic creation of indoor maps containing accessibility features of the building and 2. automatic creation of individualised, accessible map representations. To achieve the first goal, two steps are required in the implementation phase (arrow down): 1. indoor maps by analysing escape plans and 2. enrich indoor maps with accessibility features obtained by video analysis and computer vision. From each of these two steps, an arrow leads to ‘Georeferencing and Integration in GIS (OSM)’.

This is followed by two realization steps that lead to the fulfillment of the second goal, the generation of different map representations. In the first step, individual and accessible map representations (audio-visual, audio-tactile and text-based) are implemented. In the second step, individualised, mobile interaction with maps (position-based scene descriptions) will be realised.