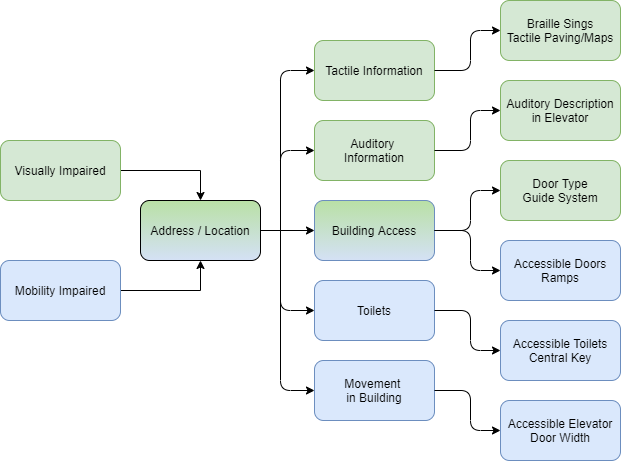

Besides the semi-automatic indoor map data collection and map creation process, providing the collected information in an accessible manner for different target groups is a key aspect of the AccessibleMaps project. We therefore aim to develop several solutions that make the collected information on buildings and their accessibility features accessible to people with visual impairments, people with blindness and people with mobility impairments.

How can our diverse target group access the collected indoor map data?

Overall, several solutions are currently being researched, developed and tested in the scope of the AccessibleMaps project:

- Tactile Maps

- Digital Indoor Maps for People with Visual Impairments

- Accessible Mobile Interaction with Indoor Map Data

Tactile Maps

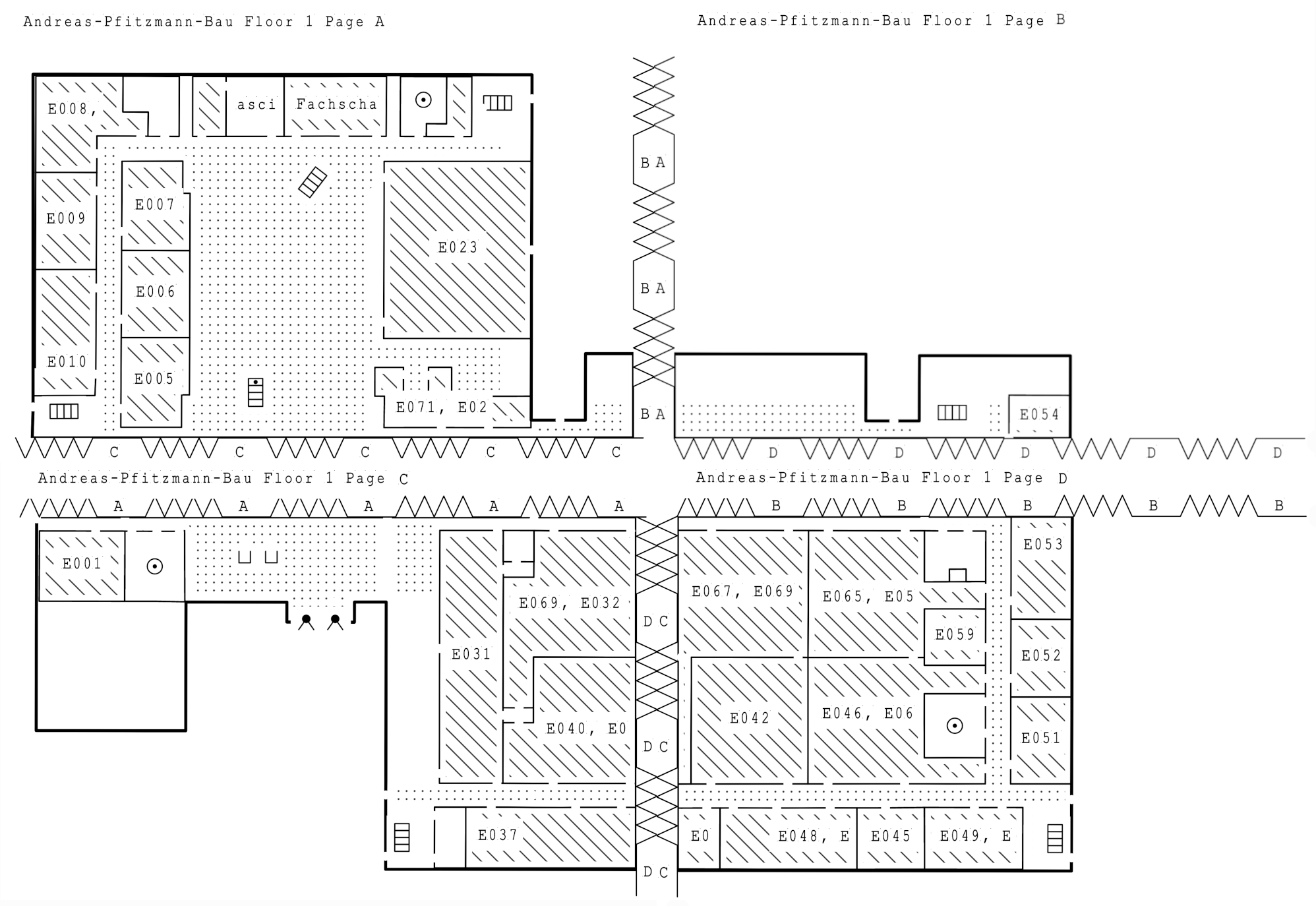

Tactile maps enable people with visual impairments or blindness to access indoor map data. They consist of raised line patterns, symbols, and surface textures that replace colors to distinguish element classes from each other (e.g. toilets, entrances, stairs). Our goal is to create adapted tactile indoor maps from OSM data in an automated way, making not only the map data but also the creation of tactile maps accessible.
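The distinction between element classes can be sketched as a lookup from OSM tags to tactile styles. The following Python sketch is illustrative only: the tag checks use common OSM tags (`amenity=toilets`, `entrance=*`, `highway=steps`, `stairs=yes`), while the style names and the functions `classify` and `tactile_style` are hypothetical and are not taken from the project's implementation.

```python
from typing import Dict, Optional

# Hypothetical mapping from element class to a tactile rendering style.
# The real symbol and texture set of the project is not described here.
TACTILE_STYLES: Dict[str, str] = {
    "toilet": "dotted texture",
    "entrance": "raised triangle symbol",
    "stairs": "parallel line texture",
}


def classify(tags: Dict[str, str]) -> Optional[str]:
    """Return an element class for a set of OSM tags, or None if unknown."""
    if tags.get("amenity") == "toilets":
        return "toilet"
    if "entrance" in tags:
        return "entrance"
    if tags.get("highway") == "steps" or tags.get("stairs") == "yes":
        return "stairs"
    return None


def tactile_style(tags: Dict[str, str]) -> Optional[str]:
    """Look up the (assumed) tactile style for an OSM element."""
    element_class = classify(tags)
    if element_class is None:
        return None
    return TACTILE_STYLES[element_class]
```

For example, `tactile_style({"amenity": "toilets"})` yields the style assigned to toilets, while an element with unrelated tags yields `None` and would need a default rendering.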

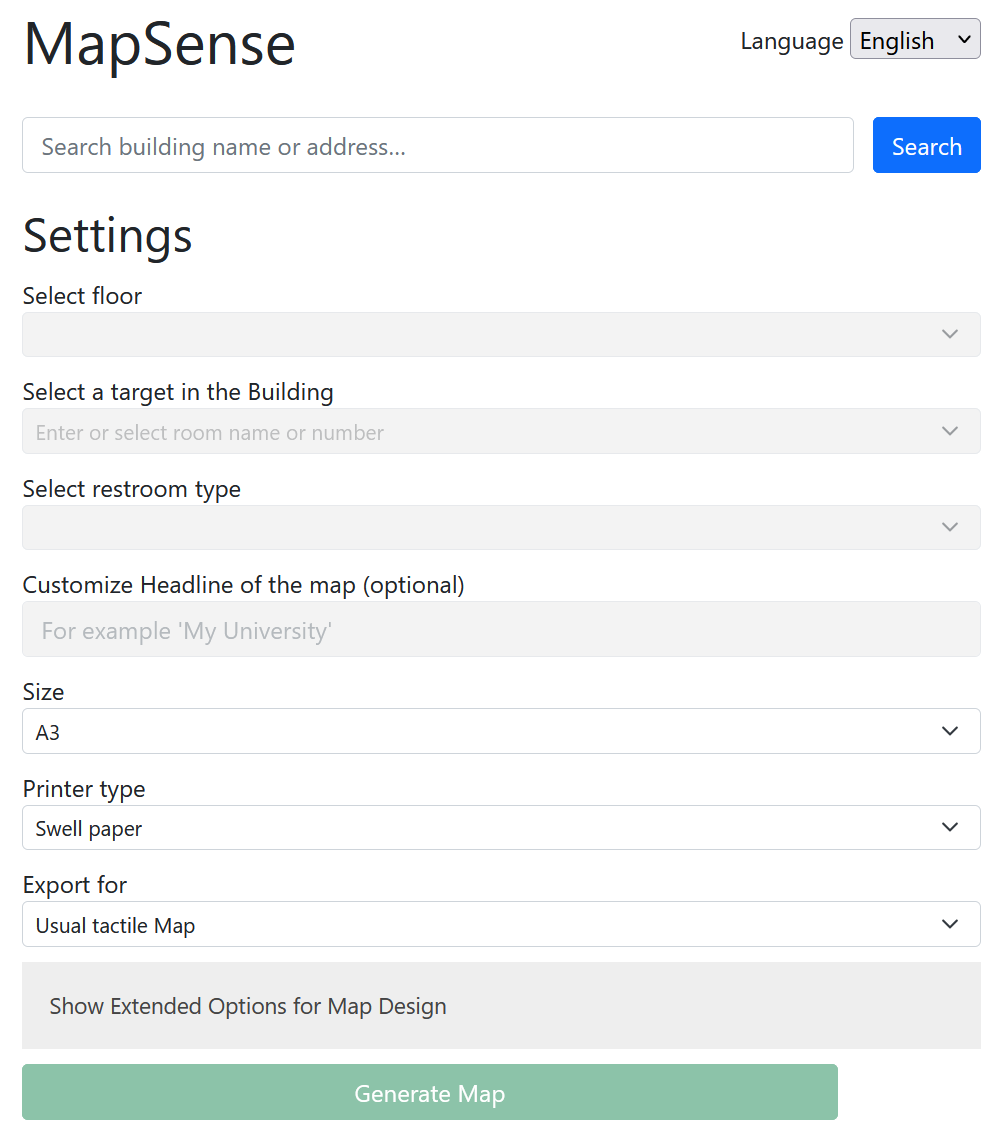

To achieve this, the MapSense prototype has been developed within the project. It aims to provide a fully automated process for generating adapted tactile representations from OSM data. These representations should be individually suitable for different use cases. For the first time, they can also be created by people with blindness and visual impairment themselves.

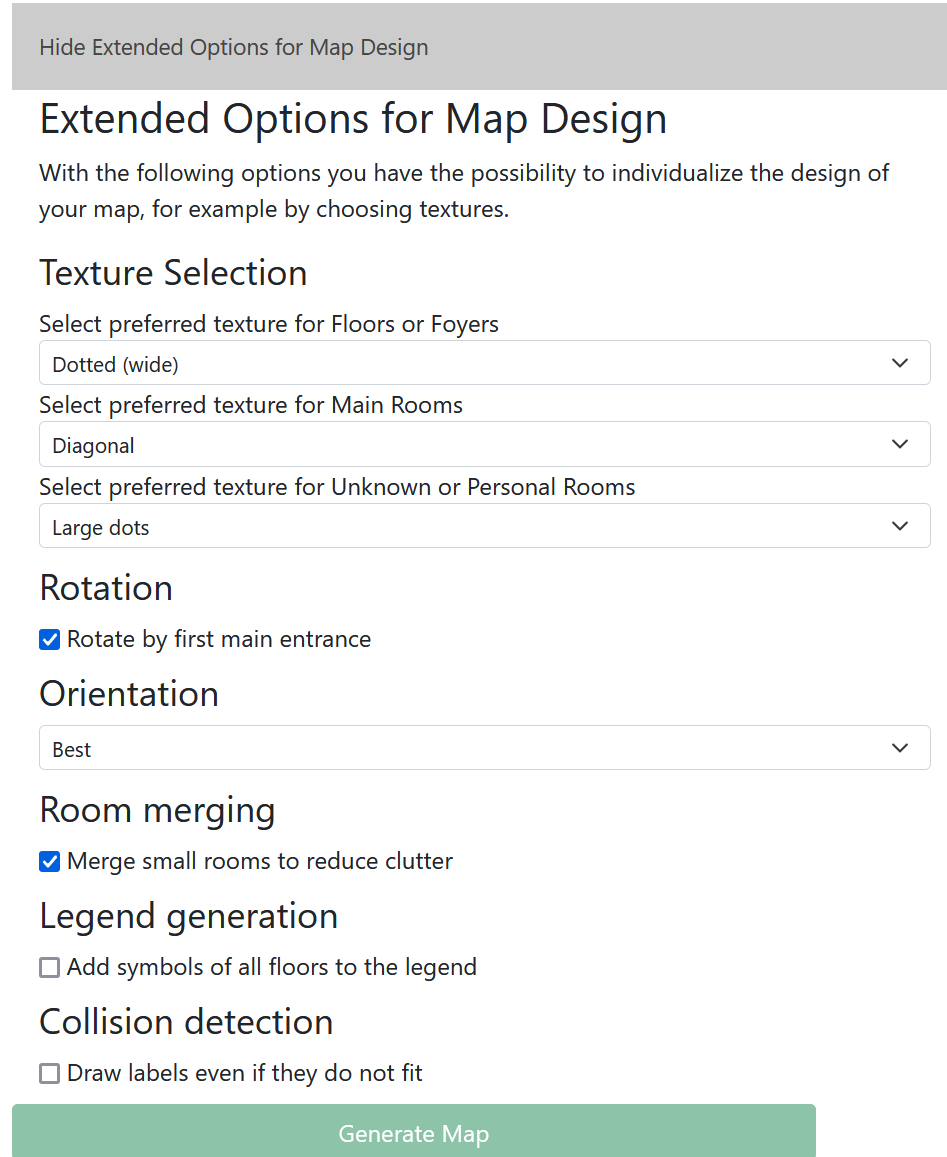

To ensure optimal positioning of Braille labels and symbols, several algorithms are applied, among others for scaling and splitting the maps. The symbols, textures and abbreviations used are explained in an automatically generated legend.

MapSense is hosted as a web application with a user interface optimized for screen readers. Users can search for a building via a search box. Basic options, such as the floor to be rendered, a target within the building, the desired paper size and the print type (swell paper or embossed print), can be set via corresponding input elements. In addition, a number of advanced options are available to further customize the map to the individual needs of the users.

In addition to normal tactile maps, exports can also be generated for various end devices for audio-tactile exploration, such as the TactonomReader, the Neo SmartPen or the TPad.

The generated SVG files are made available for download as a ZIP file and can subsequently either be printed on swell paper with a standard printer and then swollen, or printed with an embossing printer.
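Bundling several generated SVG files into one ZIP download can be sketched as follows. This is a minimal illustration using Python's standard `zipfile` module, not the actual MapSense code; the function name `bundle_svgs` and the file names are assumptions.

```python
import io
import zipfile
from typing import Dict


def bundle_svgs(pages: Dict[str, str]) -> bytes:
    """Pack SVG documents (file name -> SVG markup) into an in-memory ZIP."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for file_name, svg_markup in pages.items():
            # writestr stores a string as one archive member.
            archive.writestr(file_name, svg_markup)
    return buffer.getvalue()


# Hypothetical example: one map page and its legend.
example_zip = bundle_svgs({
    "map.svg": "<svg xmlns='http://www.w3.org/2000/svg'></svg>",
    "legend.svg": "<svg xmlns='http://www.w3.org/2000/svg'></svg>",
})
```

Building the archive in memory avoids temporary files on the server; the resulting bytes could then be returned as the download response.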

MapSense enables, for the first time, the accessible creation of individualized, adaptive building maps for and by people with blindness and visual impairment, thus contributing significantly to improved mobility and independence for this target group.

In this demo video, the functionality of MapSense is illustrated using the Andreas-Pfitzmann-Bau as an example:

The following video demonstrates the TPad for audiotactile exploration of maps. The required map data can be generated with the MapSense application, for example.

Digital Indoor Maps for People with Visual Impairments

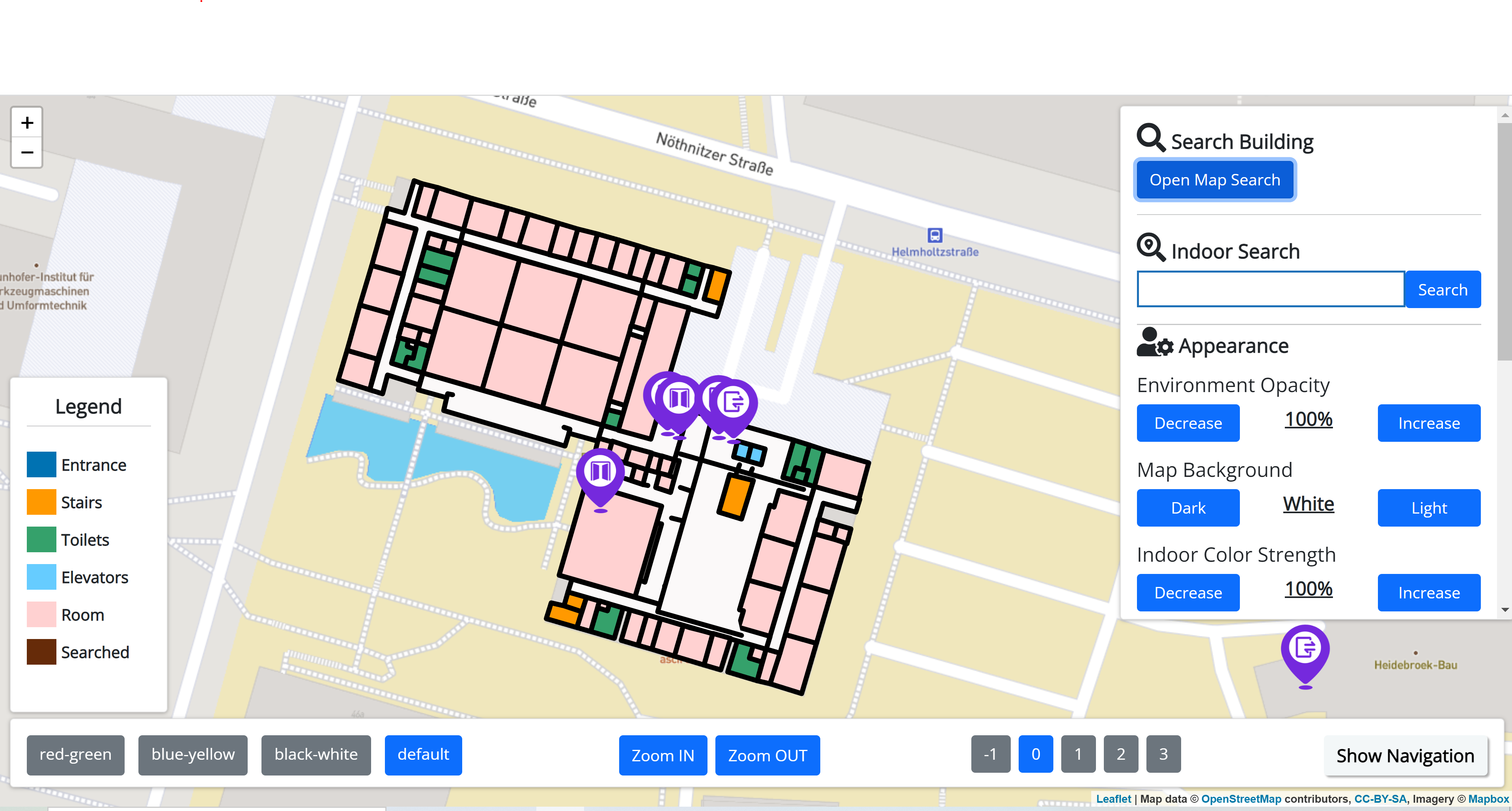

Common map representations allow interaction with a visual map and the information it contains. This information is encoded using different symbols, map labels, or color schemes. Depending on the type of visual impairment, such representations can be challenging for people with visual impairments. Low-contrast color schemes, or color schemes that are unsuitable for people with color vision impairments, often lead to difficulties in perception. The AccessibleMaps project therefore aims to explore solutions for optimized map representations for people with visual impairments. For this purpose, a first prototype was developed to explore concepts for adapted indoor map representations.
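Whether a color pair is "low-contrast" can be quantified. One common yardstick is the contrast ratio defined in the W3C Web Content Accessibility Guidelines (WCAG); using it here is an assumption for illustration, not a description of the prototype. The sketch below computes that ratio for two sRGB colors given as 8-bit `(r, g, b)` tuples.

```python
from typing import Tuple

Color = Tuple[int, int, int]


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(color: Color) -> float:
    """Relative luminance as defined by WCAG (0 = black, 1 = white)."""
    r, g, b = (_linearize(v) for v in color)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(first: Color, second: Color) -> float:
    """WCAG contrast ratio, ranging from 1 (identical) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(first), relative_luminance(second)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)
```

WCAG asks for a ratio of at least 3:1 for graphical objects and at least 4.5:1 for normal-sized text, so a check such as `contrast_ratio(room_fill, label_color) >= 4.5` (with hypothetical color variables) could flag problematic combinations in a map style.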

In this demo video, the functionality of Mapable is explained using the example of the Andreas-Pfitzmann-Bau of TU Dresden:

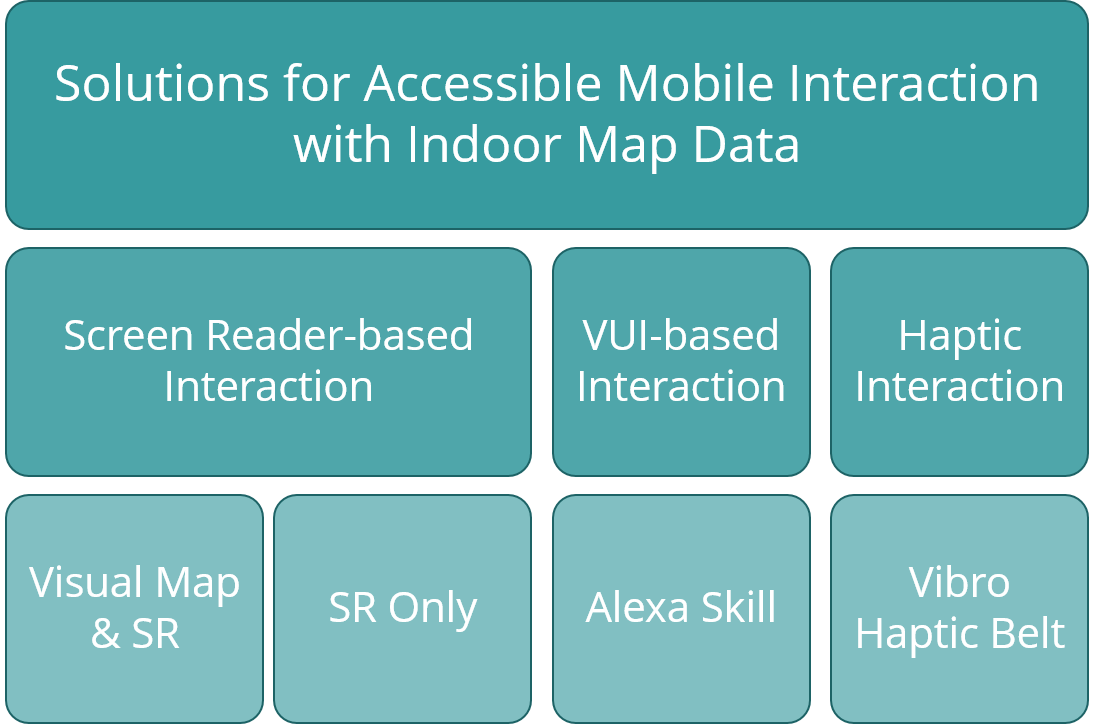

Accessible Mobile Interaction with Indoor Map Data

We investigated several solutions for accessible interaction with digital map data on mobile devices. From these, suitable approaches were chosen for further research and development.

Screen Reader-based Interaction

To make indoor map data accessible on mobile devices for both users with visual impairments and users with mobility impairments, we developed a system that combines a visual map representation with support for screen reader (SR) interaction.

As a result, a single application is accessible to sighted people, people with mobility impairments and people with blindness. This also enables interaction between people with blindness or visual impairments and sighted people. For example, a blind person can use the application to ask a sighted person for orientation support inside a building, and both parties can work with the same mobile application while the sighted person gives assistance.

Voice User Interface-based Interaction

Because a Voice User Interface (VUI) can be accessible to people with mobility impairments, people with visual impairments and blind people, it can be used to provide information on accessibility features and general information on buildings in an accessible manner.

Therefore, we are currently developing a prototypical VUI that:

- supports users before or while visiting unknown buildings by providing information on accessibility features

- provides a natural dialog flow

- tailors the provided information to the users' needs
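Tailoring the provided information to a user's needs can be sketched as filtering accessibility features by a user profile before a spoken answer is composed. The Python sketch below is purely illustrative: the `Feature` type, the need labels and the `build_response` function are hypothetical and do not describe the developed skill.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class Feature:
    """An accessibility feature and the (assumed) needs it is relevant for."""
    description: str
    relevant_for: FrozenSet[str]


def build_response(building: str, features: Iterable[Feature],
                   needs: FrozenSet[str]) -> str:
    """Compose a short spoken answer containing only relevant features."""
    relevant = [f.description for f in features if f.relevant_for & needs]
    if not relevant:
        return f"I have no matching accessibility information for {building}."
    return f"In {building}: " + "; ".join(relevant) + "."


# Hypothetical sample data.
FEATURES = [
    Feature("there is a step-free entrance on the north side",
            frozenset({"mobility"})),
    Feature("the elevator has Braille buttons and voice output",
            frozenset({"blindness", "visual"})),
]
```

With this data, a user profile of `frozenset({"mobility"})` would only hear about the step-free entrance, which keeps the dialog short and relevant.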

In this demo video, the developed Alexa Skill is presented by example: